Quote of the Day

I am not a product of my circumstances. I am a product of my decisions.

— Stephen Covey

Introduction

I was reviewing a test report today for an optical receiver with an integrated power measurement sensor. This sensor is not particularly accurate -- its accuracy is specified as within ±3 dB of the true value, which is not good at all. As I looked at our test data, I immediately noticed that I could calibrate the sensor to get a much more accurate result. But as I continued to think about the problem, I decided not to calibrate it. The decision came down to the cost of calibration versus the value of a more accurate result to the customer. Let's look at how I made this decision -- no magic here, just a common engineering tradeoff.

Background

Some Definitions

Wikipedia has reasonable definitions for sensors and transducers.

- Sensor

- A sensor is a converter that measures a physical quantity and converts it into a signal which can be read by an observer or by an instrument.

- Transducer

- A transducer is a device that converts a signal in one form of energy to another form of energy. Energy types include (but are not limited to) electrical, mechanical, electromagnetic (including light), chemical, acoustic and thermal energy. While the term transducer commonly implies the use of a sensor/detector, any device which converts energy can be considered a transducer. Thus, a motor is a transducer.

I should also spend a bit of time discussing my views on calibration versus compensation.

- Calibration

- According to Wikipedia, calibration is a comparison between measurements – one of known magnitude or correctness made or set with one device and another measurement made in as similar a way as possible with a second device. I view calibration as a measurement process designed to generate the mathematical parameters needed to adjust a transducer's real output closer to some idealized value.

- Compensation

- According to Wikipedia, compensation is a plan to mitigate the effect of expected side-effects or imperfections. I view compensation as the procedure that uses calibration data to "correct" the output of a transducer.

Sensor Errors

By the definitions given above, a sensor converts a physical quantity that cannot be directly measured into a form that can be directly measured. Unfortunately, every conversion is imperfect at some level. As long as the conversion errors do not vary with time, we can use simple table-based or polynomial-based error compensation.
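As a sketch of the table-based approach, the Python below corrects a raw reading by linear interpolation between calibration points. The function name `compensate_table` and the example table are hypothetical; a real table would come from measuring the sensor against a known reference. Readings outside the table are extrapolated from the nearest segment, which is an assumption; clamping or raising an error are equally valid choices.

```python
from bisect import bisect_left


def compensate_table(reading, table):
    """Map a raw reading to a corrected value by piecewise-linear interpolation.

    table: list of (raw_reading, true_value) pairs sorted by raw_reading with
    distinct raw values (hypothetical calibration data). At least two points
    are required. Readings outside the table are linearly extrapolated.
    """
    if len(table) < 2:
        raise ValueError("need at least two calibration points")
    raws = [raw for raw, _ in table]
    # Index of the upper end of the segment that brackets the reading,
    # clamped so that the segment always exists.
    i = min(max(bisect_left(raws, reading), 1), len(table) - 1)
    (r0, t0), (r1, t1) = table[i - 1], table[i]
    # Linear interpolation (or extrapolation) along that segment.
    return t0 + (t1 - t0) * (reading - r0) / (r1 - r0)
```

For example, with the made-up table `[(-32.0, -30.0), (-21.0, -20.0), (-10.0, -10.0)]`, a raw reading of -26.5 maps to -25.0.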

The degree of error compensation you require often drives the compensation approach you use. I remember talking to an engineer who was trying to measure temperature to a tiny fraction of a degree. He solved his problem using very time-consuming, high-accuracy temperature measurements and an assortment of piecewise seventh-order polynomials to compensate for the errors in his sensors. My accuracy requirements are not so severe. I would like to measure optical power from -10 dBm to -30 dBm with an accuracy of ±0.5 dB.

In the case I will discuss here, I have a sensor with a digital interface (i.e., a processor with an I2C interface is embedded in the sensor) that reports the optical power present on a fiber. Ideally, if I have -20 dBm of optical power on the fiber, my sensor will report -20 dBm. If my sensor were ideal, a plot of measured optical power versus actual optical power would be a straight line with slope 1 and intercept 0. Let's call this graph of measured versus actual optical power my sensor's response curve.

Unfortunately, the vendor only specifies the digital value returned by the sensor as accurate within ±3 dB. However, the sensor response is fairly linear -- the only problem is that the response curve has a slope that is not 1 and an intercept that is not 0. This means that we can model the error using two parameters:

- gain error

This term represents the slope error (i.e. deviation from 1) in my sensor's response curve.

- bias error

This term represents the intercept error (i.e. deviation from 0) of my sensor's response curve.
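Writing P_true for the actual optical power and P_meas for the sensor reading (both in dBm), the two-parameter model can be written as follows. The symbols are notation introduced here for illustration, not taken from the vendor's datasheet. An ideal sensor has zero gain error and zero bias error, so the response curve reduces to P_meas = P_true.

```latex
\begin{aligned}
P_{\text{meas}} &= m\,P_{\text{true}} + b,\\
m &= 1 + \varepsilon_g \quad \text{(slope; } \varepsilon_g \text{ is the gain error)},\\
b &= \varepsilon_b \quad \text{(intercept in dB; } \varepsilon_b \text{ is the bias error)}.
\end{aligned}
```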

Role of Calibration

When a sensor has an embedded processor, the vendor could calibrate the sensor to minimize the reported error. In my situation, the sensor appears to have a linear characteristic that a simple linear transform could correct well. However, the sensor vendor chose not to do that. Why?

I am pretty sure I know why -- they did not want to spend the money to calibrate the sensor. Calibration of an analog sensor can be costly because:

- You need a certified source (i.e., a reference optical power source in this case) to stimulate the sensor.

- You need to re-qualify your test fixture periodically.

- It is expensive to build a repeatable, certifiable test fixture.

- You need to pay for test time.

However, you only need to spend this money if you need the accuracy. In my case, the sensor vendor made their sensor less expensive by not calibrating it. If I want more accuracy, I need to calibrate it myself. Now I need to decide whether the cost of calibration is worth it.

Analysis

I spent some time looking at the sensor. Here are my results.

Raw Sensor Data

Figure 1 shows the actual sensor response (i.e., input power versus measured power). Observe that the characteristic is quite linear over the -10 dBm to -30 dBm range that I am interested in. However, the line has neither a slope of 1 nor an intercept of 0.

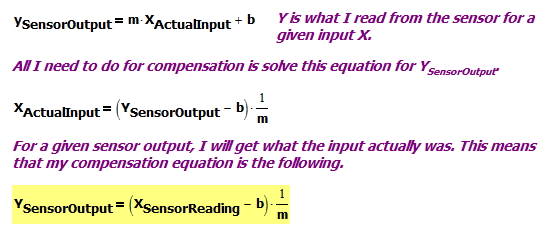
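The best-fit line through data like Figure 1 can be estimated with ordinary least squares. The Python sketch below assumes paired readings of reference (actual) power and sensor-reported power, both in dBm, over the range of interest; `fit_line` is a hypothetical helper, and the figure itself may have been produced with a different fitting tool.

```python
def fit_line(actual_dbm, measured_dbm):
    """Ordinary least-squares fit of measured = slope * actual + intercept.

    Returns (slope, intercept). Requires at least two paired points with
    at least two distinct actual power levels.
    """
    n = len(actual_dbm)
    if n < 2 or n != len(measured_dbm):
        raise ValueError("need at least two paired points")
    mean_x = sum(actual_dbm) / n
    mean_y = sum(measured_dbm) / n
    # Centered sums of squares and cross-products.
    sxx = sum((x - mean_x) ** 2 for x in actual_dbm)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(actual_dbm, measured_dbm))
    if sxx == 0:
        raise ValueError("actual power levels must not all be equal")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept
```

As a check with made-up, noise-free data generated from slope 1.1 and intercept 0.8, `fit_line([-30.0, -25.0, -20.0, -15.0, -10.0], [1.1 * x + 0.8 for x in [-30.0, -25.0, -20.0, -15.0, -10.0]])` recovers those two values to within floating-point rounding.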

Compensated Sensor Data

Figure 2 shows the compensation approach. The compensation uses the best-fit slope and intercept values estimated from the data in Figure 1.
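Linear compensation amounts to inverting the fitted line: given a reading and the best-fit slope and intercept, solve for the actual power. This is a minimal sketch, assuming the fit was done as measured = slope × actual + intercept with powers in dBm; `compensate` is a hypothetical name.

```python
def compensate(measured_dbm, slope, intercept):
    """Estimate actual power (dBm) by inverting measured = slope * actual + intercept."""
    if slope == 0:
        raise ValueError("slope must be non-zero")
    return (measured_dbm - intercept) / slope
```

Only two constants per unit need to be stored, which keeps the compensation itself trivial; the cost lies in measuring them.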

Figure 3 shows my compensated sensor reading versus the actual optical power. Simple linear compensation looks very effective.
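One way to quantify "very effective" is to take the worst-case absolute error between compensated readings and the reference power, restricted to the -10 dBm to -30 dBm range, and compare it with the ±0.5 dB target. The helpers below are a hypothetical sketch of that check, not the procedure used to produce Figure 3.

```python
def max_abs_error_db(actual_dbm, corrected_dbm, lo_dbm=-30.0, hi_dbm=-10.0):
    """Worst-case |corrected - actual| in dB over reference points in [lo_dbm, hi_dbm]."""
    errors = [
        abs(c - a)
        for a, c in zip(actual_dbm, corrected_dbm)
        if lo_dbm <= a <= hi_dbm
    ]
    if not errors:
        raise ValueError("no reference points inside the range of interest")
    return max(errors)


def meets_accuracy_target(actual_dbm, corrected_dbm, tolerance_db=0.5):
    """True if every in-range compensated reading is within ±tolerance_db of the reference."""
    return max_abs_error_db(actual_dbm, corrected_dbm) <= tolerance_db
```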

Conclusion

By spending money on calibration, I can reduce my optical power error from ±3 dB to ±0.5 dB. The cost is about 1 minute of tester time, or roughly $2 per unit. While I would prefer more accuracy, does it really matter to a customer? How will they use this information?

- They will use the power data to ensure that their received optical power is within the dynamic range of their receivers.

Most customers (~95%) run their networks in the middle of the system's dynamic range. Since we have 15 dB of dynamic range, ±3 dB of inaccuracy is not important to most customers. Do we want to burden everyone with the expense of performance needed by only a few? Probably not.
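To make the arithmetic concrete: a link running at the center of a 15 dB dynamic range sits 7.5 dB from either edge, so a ±3 dB reading error still leaves at least 4.5 dB of margin. The hypothetical helper below computes that worst case; it assumes the operating point is given as an offset from the bottom of the range.

```python
def worst_case_margin_db(dynamic_range_db, operating_offset_db, sensor_error_db):
    """Smallest distance (dB) to either edge of the dynamic range after
    allowing for the sensor's worst-case reading error.

    operating_offset_db: operating point measured up from the bottom of the range.
    """
    to_bottom = operating_offset_db
    to_top = dynamic_range_db - operating_offset_db
    return min(to_bottom, to_top) - sensor_error_db
```

With the numbers above, `worst_case_margin_db(15.0, 7.5, 3.0)` gives 4.5 dB, versus 7.0 dB for a sensor calibrated to ±0.5 dB.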

- They will use the power data to determine if any of their optical components are aging.

In this case, precision matters more than accuracy. Customers will simply track how their optical power levels move over time. As long as the measurements are repeatable, they do not need to be very accurate.
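Under the linear error model, repeatability is enough for trend tracking: a constant bias error cancels when each reading is compared with a baseline, while a gain error only scales the apparent drift (with a slope of 1.1, a true 1 dB drop would read as a 1.1 dB drop). The sketch below, using the hypothetical helper `drift_from_baseline`, shows the bias cancelling.

```python
def drift_from_baseline(readings_dbm):
    """Change in reported power (dB) relative to the first reading."""
    baseline = readings_dbm[0]
    return [r - baseline for r in readings_dbm]
```

For example, made-up true powers of -20.0, -20.5, and -21.0 dBm and the same powers read with a +2 dB bias both yield drifts of 0.0, -0.5, and -1.0 dB.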

So I have decided not to calibrate the sensor. The uncalibrated sensor is accurate enough for 95% of our customer base. For the 5% who need an accurate measurement, we will provide an off-the-shelf test equipment option. The bulk of our customers will not have to pay for accuracy they do not need.