The Problem

This is a story with a very happy ending. I have been overweight for most of my 50+ years, and I have tried to diet many, many times without success. Dieting is hard, especially if you don't face the issue at hand, look in the mirror, and say "I can't stop eating" so that you can finally address what you need to do. Two years ago, I started a weight loss plan that resulted in my losing 103 pounds over a period of 9 months and achieving my weight loss goal. Moreover, I have been able to maintain a stable weight in the 14 months since I went off my weight loss plan and started maintenance. I will use several blog posts to discuss what I did to lose this weight. There was no magic, but it was interesting.

What was different this time from all the other times? This time, I turned weight loss into a math problem.

Motivations

Ultimately, the key to long-term weight loss is behavior modification. The key to behavior modification is motivation. Three things happened that provided me the motivation that I needed to diet seriously.

- My youngest son was looking at some old pictures of me and commented that he had never seen me as thin as I was in some of the pictures. He did not say this in a mean manner, but it caused me to think about the example that I had set for him. I distinctly remember saying to myself that I can change this situation.

- Years before, I had bought a life insurance plan that increased in cost every year. Because of my weight, it was expensive. Basically, every month I received a bill for being overweight, and I did not like how it was rising. I wondered about many things: should I stick with it, did I need final expense insurance, or would a change to a fixed-rate policy save money? I realized that if I wanted the latter, I had to lose weight.

- I hired a senior-level natural bodybuilder onto my engineering team. This gentleman literally changed my life. I have concluded that bodybuilders know a lot about diet and exercise and that people should listen very carefully to what they say.

These are all good reasons, but the real reason came down to my family. When you get right down to it, most overweight people would like to lose weight, but simply doing something good for themselves is not sufficient motivation. You need to find a reason that is bigger than yourself. In my case, I decided that I needed to do all that I could to ensure that I would be there when my family needs me.

Timing

My doctor told me that I needed to have a baseline colonoscopy – one of the joys of turning 50. Since I was going to be empty anyway, I decided to turn my colonoscopy into a weight loss opportunity. My diet would begin the second week of November with my colonoscopy.

Since I am a person that always needs a date goal to strive for, I looked at the calendar and I could see a number of important dates in the near future.

- My wife and I were going on a cruise in March.

- My yearly physical was scheduled for May.

- My son was going to be back home on the fourth of July.

- My life insurance rate was scheduled to increase in August.

I chose July 4 as my date for hitting my goal weight because it was far enough in the future (9 months) that I would have a credible chance of losing 103 pounds. I would also be able to avoid my life insurance rate increase by qualifying for a cheaper policy. Losing 103 pounds in 9 months meant that I needed to lose about 11.4 lb/month. That sounded hard but doable. Anyone who has a life insurance policy like mine will be aware of what it takes to get a good deal. I know people who have shopped around for a while and checked out companies like Curo Financial to see what policies they could get.

Life Insurance Math

I reviewed the life insurance policies of a number of companies: AAA, Prudential, Met Life, etc. All the policies had a common theme – they predicted your personal risk based on your Body Mass Index (BMI). They did not come right out and say BMI, but you could see that their tables were derived using the BMI equation.

BMI Basics

BMI is a very simple formula that has major implications for the cost of your insurance.

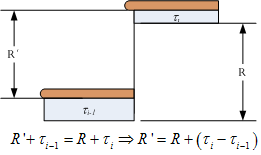

where m is the body mass in kilograms and h is the body height in meters. This formula is used around the world to assess the body mass situation of groups of people.
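The definition above (mass in kilograms divided by the square of height in meters) is easy to turn into code. The following is a minimal sketch, not part of any insurer's tooling; the function names `bmi` and `bmi_imperial` and the conversion constants are my own choices for illustration.

```python
LB_PER_KG = 2.20462   # pounds per kilogram (approximate conversion factor)
M_PER_INCH = 0.0254   # meters per inch (exact by definition)


def bmi(mass_kg: float, height_m: float) -> float:
    """Body Mass Index: mass in kilograms divided by height in meters squared."""
    if mass_kg <= 0 or height_m <= 0:
        raise ValueError("mass and height must be positive")
    return mass_kg / height_m ** 2


def bmi_imperial(weight_lb: float, height_in: float) -> float:
    """Convert pounds and inches to metric units, then apply the same formula."""
    return bmi(weight_lb / LB_PER_KG, height_in * M_PER_INCH)


# A 6 ft (72 in), 170 lb person.
print(round(bmi_imperial(170, 72), 1))  # prints 23.1
```

Keeping the metric formula in one place and converting at the edges avoids having to remember a separate imperial version of the equation.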

The medical folks assign labels to different BMI ranges.

| BMI Range | Category |
| --- | --- |
| < 16.5 | Severely Underweight |
| 16.5–18.4 | Underweight |
| 18.5–24.9 | Normal |
| 25.0–29.9 | Overweight |
| 30.0–34.9 | Obese Class I |
| 35.0–39.9 | Obese Class II |
| ≥ 40.0 | Obese Class III |

A person who is Obese Class III is often referred to as "morbidly obese." There are international variations on these thresholds.
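The table of ranges can be expressed as a small lookup. This is a sketch under two assumptions of mine: each range is treated as half-open (so a BMI of exactly 25.0 counts as Overweight), and a BMI of exactly 40.0 falls in Obese Class III. The function name `bmi_category` is hypothetical.

```python
# Upper bound (exclusive) of each category, in ascending order.
# Assumption: ranges are half-open, e.g. 18.5 <= BMI < 25.0 is "Normal".
_THRESHOLDS = [
    (16.5, "Severely Underweight"),
    (18.5, "Underweight"),
    (25.0, "Normal"),
    (30.0, "Overweight"),
    (35.0, "Obese Class I"),
    (40.0, "Obese Class II"),
]


def bmi_category(bmi: float) -> str:
    """Return the category label for a BMI value using the thresholds above."""
    for upper_bound, label in _THRESHOLDS:
        if bmi < upper_bound:
            return label
    return "Obese Class III"  # everything at or above 40.0


for value in (22.0, 26.6, 42.5):
    print(value, bmi_category(value))
```

Because thresholds vary internationally, anyone adapting this would need to edit the `_THRESHOLDS` list rather than the function itself.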

BMI Trivia

Here are some commonly cited BMI values.

- Average American female supermodel BMI = 16.5 (see calculation below)

- Average American male model BMI (6 ft, 170 lb) = 23.1

- Average US female BMI = 26.5

- Average US male BMI = 26.6

Example Calculation

Let's walk through an example calculation using the typical American female supermodel.

- average height = 5' 10'' = 1.778 meters

- average weight = 115 lb = 52.163 kg

The calculation is straightforward.
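Substituting the height and weight listed above into the BMI definition (mass divided by height squared) gives the following, shown here as a sketch of the arithmetic with intermediate values rounded:

```latex
\mathrm{BMI} = \frac{m}{h^{2}}
             = \frac{52.163\ \mathrm{kg}}{(1.778\ \mathrm{m})^{2}}
             = \frac{52.163\ \mathrm{kg}}{3.161\ \mathrm{m}^{2}}
             \approx 16.5\ \mathrm{kg/m^{2}}
```

This agrees with the supermodel value of 16.5 quoted in the trivia list.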

Issues with BMI

I view BMI as a type of figure of merit. As a figure of merit, it definitely has some issues. For example, the units of BMI are kg/m². Intuitively, this seems odd because the units look like a mass per unit area. If tall people were simply scaled-up versions of short people, it seems that the units should be kg/m³, which are the units of density. Since muscle is denser than fat, I can see where higher densities might be good and lower densities might be bad. While this makes intuitive sense, it is clear that tall people are not scaled-up short people and that using a density form of measure would not work. Note that BMI is calculated the same way for men and women, who definitely are constructed differently. So it seems that the use of height squared is a compromise form of metric.
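The scaling argument can be made concrete with a small sketch. It assumes, purely for illustration, perfect geometric similarity (mass proportional to height cubed), which real people do not follow. The reference person (1.75 m, 70 kg) and the function name `scaled_mass` are hypothetical.

```python
def scaled_mass(ref_mass_kg: float, ref_height_m: float, height_m: float) -> float:
    """Mass of a geometrically similar person: scales with the cube of height."""
    return ref_mass_kg * (height_m / ref_height_m) ** 3


REF_HEIGHT_M = 1.75  # hypothetical reference height
REF_MASS_KG = 70.0   # hypothetical reference mass

for height_m in (1.50, 1.75, 2.00):
    mass_kg = scaled_mass(REF_MASS_KG, REF_HEIGHT_M, height_m)
    per_area = mass_kg / height_m ** 2    # BMI-style measure, kg/m^2
    per_volume = mass_kg / height_m ** 3  # density-style measure, kg/m^3
    print(f"{height_m:.2f} m  {mass_kg:6.1f} kg  "
          f"{per_area:5.1f} kg/m^2  {per_volume:5.2f} kg/m^3")
```

Under this assumption the kg/m³ column stays constant while the kg/m² column grows in proportion to height, so a perfectly scaled-up person would be penalized by BMI simply for being tall.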

In fact, most medical experts say that while BMI has value with respect to populations, it has less value with respect to an individual. Individuals vary greatly with respect to muscle mass and skeletal frame. For example, the bodybuilder on my work team has a BMI of 33. This puts him in the Obese Class I category, but he has a body fat percentage that is less than 12% (when competing it is around 7%). I guarantee you that he is not obese by any definition other than BMI.

All this being said, the insurance companies use BMI for medical underwriting, so we are stuck with it and have to use it. Its main advantage is that it is easy to compute.

My Personal BMI Situation

Alas, my personal BMI situation at the start of my diet was not good – it was 42.5. I was morbidly obese (the word "morbidly" makes me shudder). I had my work cut out for me. As it turned out, I hit my target weight on July 4 exactly.